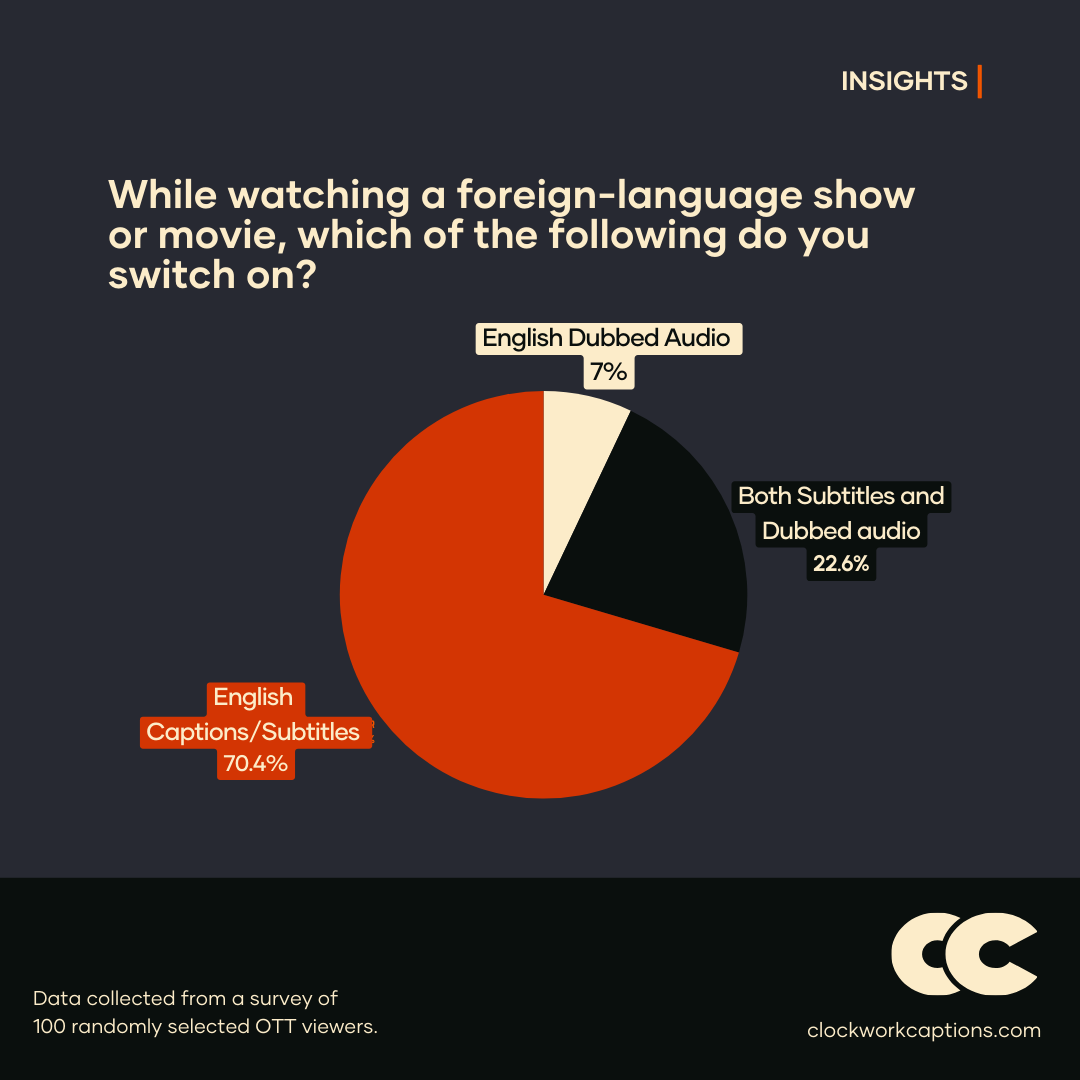

The entertainment industry is going global, and so is the demand for multilingual content. To get a pulse on viewer preferences, our marketing team recently polled a Reddit community on how its members prefer to watch foreign-language content.

The results align with what many have observed about audience preferences: viewers still largely favour subtitles (70.42%) over dubbed audio (7.04%) when watching foreign-language content. This preference for subtitles may partly reflect a shortage of high-quality dubbed content.

While traditional human dubbing remains the gold standard, its cost and long turnaround times have spurred exploration into AI-powered alternatives. But is AI dubbing at a stage where it can deliver an optimal viewer experience? At Clockwork Captions, we wanted to find out.

Our experiment: Learning through real-world application

Recently, we explored AI dubbing for a popular YouTube content creator. With a plethora of options available, we dove deep into the process, testing the top five contenders. Here are the mishaps, some of them hilarious, and the challenges we encountered along the way.

Voice Modulation: Initially, we were impressed by the AI's ability to replicate voices. Short clips were no problem, but longer videos revealed a different story. The AI's voice became monotonous and repetitive, which can quickly lose viewers' interest.

Capturing Expressions, Laughter, and More: AI struggles to capture the nuances of human emotion, like laughter and excitement. Watching a video without these elements is like eating a bland sandwich. The AI's voice might sound emotionless, even when the character is laughing, creating a jarring experience for viewers.

Lip Sync: AI can achieve near-perfect lip sync, but only under specific conditions. If the speaker is looking directly at the camera, the AI can sync their lips accurately. However, if the speaker turns away or is seen from a side angle, the AI's lip sync can become comical and inaccurate. And when it comes to multi-speaker videos, like podcasts, the AI struggles significantly.

Timing, Translation, and Transcription (for the script): AI can perform timing, translation, and transcription, but it's like having a friend who needs constant supervision. Correcting the AI's errors takes so much effort that it might be more efficient to have a human handle these tasks from the start.

Audio Speed: The AI's speed was inconsistent. Depending on how much text had to fit into a given time slot, the AI would either rush through the audio or drag it out. Some clips sounded like chipmunks on caffeine, while others felt like slow-motion dramatic monologues.
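For readers who want to catch pacing problems before generating audio, here is a minimal sketch of one way to do it: compare the amount of translated text in each subtitle cue with the time available to speak it. This is not how any of the tools we tested work internally. The `Cue` structure, the function names, and the `slow` and `fast` thresholds (in words per second) are all assumptions chosen for illustration, and sensible values will vary by language and speaker.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One subtitle cue: a time slot and the translated line to be voiced."""
    start: float  # seconds
    end: float    # seconds
    text: str


def words_per_second(cue: Cue) -> float:
    """Return how many words must be spoken per second to fit the cue."""
    duration = cue.end - cue.start
    if duration <= 0:
        raise ValueError("cue must have a positive duration")
    return len(cue.text.split()) / duration


def classify_pace(cue: Cue, slow: float = 1.5, fast: float = 3.5) -> str:
    """Label a cue as 'rushed', 'dragged', or 'ok'.

    The slow/fast thresholds are illustrative assumptions, not measured values.
    """
    rate = words_per_second(cue)
    if rate > fast:
        return "rushed"
    if rate < slow:
        return "dragged"
    return "ok"


if __name__ == "__main__":
    cues = [
        Cue(0.0, 2.0, "one two three four five six seven eight nine ten"),
        Cue(2.0, 12.0, "hello there"),
        Cue(12.0, 14.0, "one two three four five"),
    ]
    for cue in cues:
        print(f"{cue.start:>5.1f}s  {classify_pace(cue):<8}  {cue.text}")
```

Cues flagged as rushed are candidates for a tighter translation, and cues flagged as dragged may need a longer line or a shorter time slot. Counting words is a rough proxy, so a syllable or character count may suit some languages better.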

Wrong Pronunciation: AI might confuse similar-sounding words or struggle with complex phonetic patterns. This can lead to comical or jarring errors, such as mispronouncing common words or adding extra syllables.

Audio Gaps: When we synced the audio to the subtitles, everything seemed fine at first. But then, gaps started to appear, like plot holes in a bad movie. These gaps were incredibly awkward and jarring.
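Gaps like these can be flagged automatically once the start and end time of each dubbed audio segment is known. The sketch below is a minimal illustration under that assumption; the `find_gaps` name and the `max_gap` threshold of half a second are hypothetical choices, not settings from any tool we tested.

```python
def find_gaps(segments, max_gap=0.5):
    """Return (gap_start, gap_end) pairs for silences longer than max_gap.

    segments: iterable of (start, end) times in seconds, in any order.
    max_gap: longest silence, in seconds, that is considered acceptable
             (an illustrative assumption).
    """
    ordered = sorted(segments)
    if not ordered:
        return []

    gaps = []
    covered_until = ordered[0][1]  # latest end time seen so far
    for start, end in ordered[1:]:
        if start - covered_until > max_gap:
            gaps.append((covered_until, start))
        # Track the furthest end so overlapping segments are handled.
        covered_until = max(covered_until, end)
    return gaps


if __name__ == "__main__":
    dubbed = [(0.0, 2.0), (2.2, 4.0), (6.0, 8.0)]
    for gap_start, gap_end in find_gaps(dubbed):
        print(f"silence from {gap_start:.1f}s to {gap_end:.1f}s")
```

Not every silence is a defect, since the original video may contain natural pauses, so the flagged spans are best treated as a review list for a human editor.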

Multiple Speakers, Same Voice: AI can sometimes struggle to distinguish between speakers, leading it to use the same voice for multiple characters. This can confuse viewers and make it difficult to follow the conversation.

Our verdict: The human touch remains irreplaceable… for now.

Our experience highlights the significant strides AI has made in dubbing technology. However, for projects demanding nuanced delivery, emotional connection, and seamless lip-syncing, human dubbing remains the gold standard. While human dubbing carries a higher price tag, the human ability to understand and translate the emotional core of a performance is unmatched by current AI.